Sysadmin

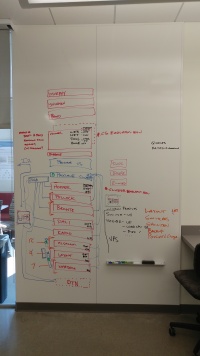

Machines and Brief Descriptions of Services

CS Machines

| Machine | Services |
| --- | --- |
| NET (vm1) | LDAP Server DNS DHCP Backup to Dali: etc, var |
| WEB (vm2) | Mailman Mail Stack Apache2 PostgreSQL MySQL Wiki Backup to Dali: etc, var |
| TOOLS (vm3) | SageNB Server Jupyterhub Server Software Modules NginX SSH Users Backup to Dali: etc, var, mnts, sage |
| BABBAGE | Firewall |
| PROTO | Weather Monitoring GPS/NTP Energy Monitoring Backup to Dali: etc, var |
| CONTROL | Users SSH HOME TOOLS Backup to Dali: etc, var |
| SMILEY | XenDocs NET WEB NFS Backup to Dali: etc, var |
| SHINKEN | Users SSH Add machines |
| MURPHY | Elderly email stack Users SSH |

Cluster Machines

| Machine | Services |
| --- | --- |
| HOPPER | Users SSH NFS server LDAP server Software Modules PostgreSQL Wiki Apache2 DNS DHCP Backup to Indiana: etc, var, cluster |
| INDIANA | New Storage Server |
| DALI | Storage Server Gitlab Backups NginX Backup to Indiana (/media/r10_vol/backups/): etc, var/opt/gitlab/backups |
| AL-SALAM | WebMO Software Modules Apache2 Backup to Indiana: etc, var |
| WHEDON | Software Modules Backup to Indiana: etc, var |
| LAYOUT | Jupyterhub Server Software Modules NginX Apache2 WebMO Backup to Indiana: etc, var |
| BRONTE | Software Modules Backup to Indiana: etc, var, nbserver |
| POLLOCK | Software Modules WebMO NginX Backup to Indiana: etc, var |
| KAHLO | Storage Server Backups NginX Backup to Indiana: etc, var |
| BIGFE | Software Modules. Hosts BCCD-related repositories and distributions. |
| T-VOC | Software Modules |
| ELWOOD | Software Modules. Used by BCCD to host www.bccd.net and www.littlefe.net. Will be deprecated when the BCCD project moves its sites to cloud-based hosting platforms. |
| krasner | Docker platform on an old lovelace machine upgraded to 16 GB of RAM. |

Switches

| Switch | Description |
| --- | --- |
| SG538SF02J | |
| CN63FP762S | |
| SG525SG025 | |
| Netgear JGS524 | |
| cs-main | |
| 5500denniscs-sw1 | |

Systems Administration Documentation

For old documentation, see: Old Wiki Information

Current Projects

This is the list we will work from in addition to service requests.

Some important procedural pages:

- Use the Sysadmin task template if you're starting a new project. Copy and paste the wiki source page and populate the basic fields.

- This is a new way of task processing. It's subject to change.

- You can see our Open Tasks here.

- We will also start filling up the Closed Tasks category.

Please update each project on its own page.

- Power down before outages

- Web logins

- Password management

- Docker and WebODM on Bronte

- Fix shinken server access

- Verify Lovelace DNS - check what machines we have vs. what's in the DNS file

- Layout Layout

- Backup in Lilly basement

- FIFO for requests rather than ad-hoc

- Accounting for hours logged

- key-based access to whedon, pollock, bronte (not just passwords)

- Power map additions and updates

- Backup on all machines - includes backup.cs.e.e (indiana?)

- Fix Lovelace machines
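
For the "Verify Lovelace DNS" item above, one possible approach is to diff a list of machines we actually have against the host names found in the DNS zone file. The sketch below assumes a BIND-style zone file and a plain list of short host names. The function names `zone_hostnames` and `compare`, and the sample zone data, are made up for illustration and are not taken from our real configuration. It only handles numeric TTLs and skips continuation lines.

```python
RECORD_TYPES = {"A", "AAAA", "CNAME"}
DNS_CLASSES = {"IN", "CH", "HS"}


def zone_hostnames(zone_text):
    """Return short host names that own an A, AAAA, or CNAME record."""
    names = set()
    for raw in zone_text.splitlines():
        line = raw.split(";", 1)[0].rstrip()  # drop zone-file comments
        # Skip blanks, directives ($TTL, $ORIGIN), and continuation lines
        # (lines that start with whitespace reuse the previous owner name).
        if not line or line[0].isspace() or line.startswith("$"):
            continue
        fields = line.split()
        owner = fields[0]
        if owner == "@":
            continue
        rest = [f.upper() for f in fields[1:]]
        # Optional numeric TTL and class come before the record type.
        while rest and (rest[0].isdigit() or rest[0] in DNS_CLASSES):
            rest.pop(0)
        if rest and rest[0] in RECORD_TYPES:
            names.add(owner.rstrip(".").split(".")[0].lower())
    return names


def compare(inventory, zone_text):
    """Return (machines missing from DNS, DNS names not in the inventory)."""
    in_dns = zone_hostnames(zone_text)
    have = {host.lower() for host in inventory}
    return sorted(have - in_dns), sorted(in_dns - have)


# Made-up sample data, not our real zone file.
sample_zone = """\
$TTL 3600
@        IN SOA ns.example.org. admin.example.org. (1 3600 600 86400 3600)
alpha    IN A     192.0.2.10
beta     3600 IN A 192.0.2.11
www      IN CNAME alpha
"""

missing, stale = compare(["alpha", "gamma"], sample_zone)
print("not in DNS:", missing)
print("in DNS but not in inventory:", stale)
```

In practice the inventory list would come from wherever we track the Lovelace machines, and `zone_text` would be read from the actual DNS file.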

After the shutdown, the following things need to be fixed or updated:

- Al-salam: PDU was powered off when we got to the basement - nodes 1-4 and 9-12 are connected to the PDU so they were powered down. Unsure when 9-12 were connected to the PDU; 1-4 were the only al-salam nodes connected this summer.

- Sudo on whedon: only a password is required

- Hard to force shutdown on hopper

- Babbage was slow to shut down and had to be rebooted (even from the shell, shutdown -h now only rebooted it)

- To mount the file system on smiley, we had to run: mount --source=/dev/vmdata/eccs-home-disk/ --target=/smiley-eccs-home-disk

- Pollock needed manual ifup

- Ganglia monitoring comes back up on some nodes (definitely on the head nodes) but needs to be started manually on the compute nodes

- Are sysadmin accounts backing up to anywhere?

- How much power are we drawing at max from everything? (PDU, burnout, etc.)
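
Problems like the smiley mount above are easy to miss after a power cycle. A small check run after boot could flag them. The following is a minimal sketch, assuming Linux's `/proc/mounts` format (device, mount point, file system type, options). The helper names `is_mounted` and `missing_mounts` and the sample text are hypothetical. Only the `/smiley-eccs-home-disk` path comes from the note above.

```python
def is_mounted(target, mounts_text):
    """True if target appears as a mount point in /proc/mounts-style text."""
    target = target.rstrip("/") or "/"
    for line in mounts_text.splitlines():
        fields = line.split()
        # Field 0 is the device, field 1 is the mount point.
        if len(fields) >= 2 and fields[1] == target:
            return True
    return False


def missing_mounts(expected, mounts_text):
    """Return the expected mount points that are not currently mounted."""
    return [t for t in expected if not is_mounted(t, mounts_text)]


# Made-up sample; on a real machine, read the text from /proc/mounts instead.
sample_mounts = """\
/dev/sda1 / ext4 rw,relatime 0 0
proc /proc proc rw,nosuid,nodev,noexec 0 0
"""

print(missing_mounts(["/", "/smiley-eccs-home-disk"], sample_mounts))
```

On the machine itself, `mounts_text` would be the contents of `/proc/mounts`, and the list of expected mount points would need to be maintained per host.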